Video translation demo

Best Weverse Video Translator Solution

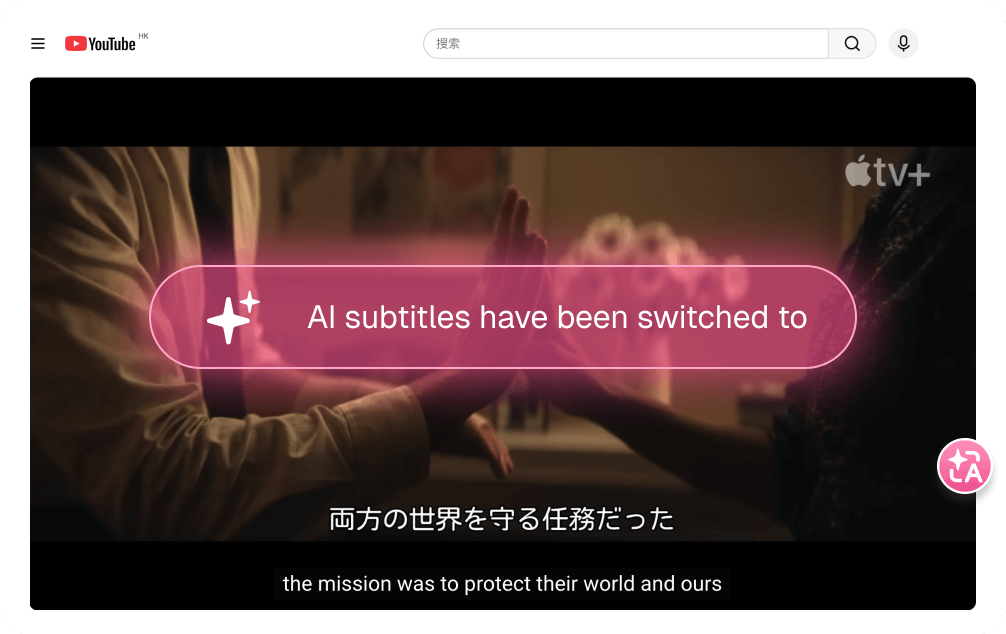

Real-time bilingual subtitles during Weverse video playback

Side-by-side Korean and translated text preserves context

AI-powered subtitle generation when captions are unavailable

Instant understanding without downloading or post-processing delays

Four steps to enjoy content in your native language

Copy & paste video link

Click Translate Video and wait a moment

Click Play Immediately to view

Weverse Video Translator That Actually Works

Watch Weverse live streams and videos with instant bilingual subtitles that appear as artists speak. No more waiting for fan translations or missing crucial moments during live broadcasts.

Our AI models understand K-pop terminology, artist nicknames, and fandom-specific language, delivering translations that capture the actual meaning behind casual conversations and inside jokes between idols and fans.

Original Korean text appears alongside English translations, so you pick up Korean phrases naturally while following your favorite artists' content. Every Weverse video becomes a language learning opportunity without extra effort.

Translate Weverse videos directly in your browser without copying links or switching apps, keeping you immersed in the platform's community experience while understanding every word artists share with global fans.

Choose among ChatGPT, Claude, Gemini, and DeepSeek models for Weverse subtitle translation to better capture the casual speech, slang, and emotional nuance that basic machine translation tools often miss or misinterpret.

Save and export translated Weverse subtitles to rewatch favorite moments, create fan content, or share translations with fellow fans. Fleeting live stream moments become a permanent, accessible record that you control.